Imagine your application is a rockstar, performing sold-out shows worldwide! But instead of cheering fans, you have frustrated users dealing with lag and slow loading times. This is where AWS Global Accelerator comes in, your trusty roadie ensuring your app’s performance is smooth sailing across the globe.

Today, we’ll dive deep into AWS Global Accelerator’s key features, see how it compares to CloudFront, and wrap up with some pro tips to boost your AWS Certification journey. Buckle up and get ready to unleash the power of global application performance!

AWS Global Accelerator is a networking service that improves the performance and availability of applications that use the TCP or UDP protocol. It achieves this through a technique called “edge proxying.”

Imagine strategically positioned outposts along a global network. These outposts, known as edge locations, receive incoming user traffic on your accelerator’s static IP addresses. Global Accelerator then carries that traffic over the AWS global network, rather than the public internet, to the optimal endpoint in your AWS infrastructure (potentially across multiple Regions). It chooses that endpoint based on factors like the user’s location, endpoint health, and the routing settings you configure.
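The routing decision can be made more concrete with a deliberately simplified Python model. This is not AWS’s actual algorithm, which is internal to the service. The model assumes that each endpoint group can be summarized by a single latency figure and a health flag, and it picks the lowest-latency healthy group. `EndpointGroup` and `pick_endpoint_group` are hypothetical names used only for illustration.

```python
from dataclasses import dataclass


@dataclass
class EndpointGroup:
    region: str           # AWS Region of the group, e.g. "eu-west-1"
    latency_ms: float     # assumed latency from the user's edge location to this Region
    healthy: bool = True  # True if at least one endpoint in the group passes health checks


def pick_endpoint_group(groups):
    """Return the lowest-latency healthy endpoint group (simplified model)."""
    healthy = [g for g in groups if g.healthy]
    if not healthy:
        raise RuntimeError("no healthy endpoint group available")
    return min(healthy, key=lambda g: g.latency_ms)


groups = [EndpointGroup("us-east-1", 80.0), EndpointGroup("eu-west-1", 15.0)]
print(pick_endpoint_group(groups).region)  # eu-west-1
groups[1].healthy = False                  # simulate a regional outage
print(pick_endpoint_group(groups).region)  # us-east-1
```

When the nearest group is marked unhealthy, the same call falls back to the next-closest Region. That is the regional failover idea in miniature.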

You can easily get started with AWS Global Accelerator using these steps:

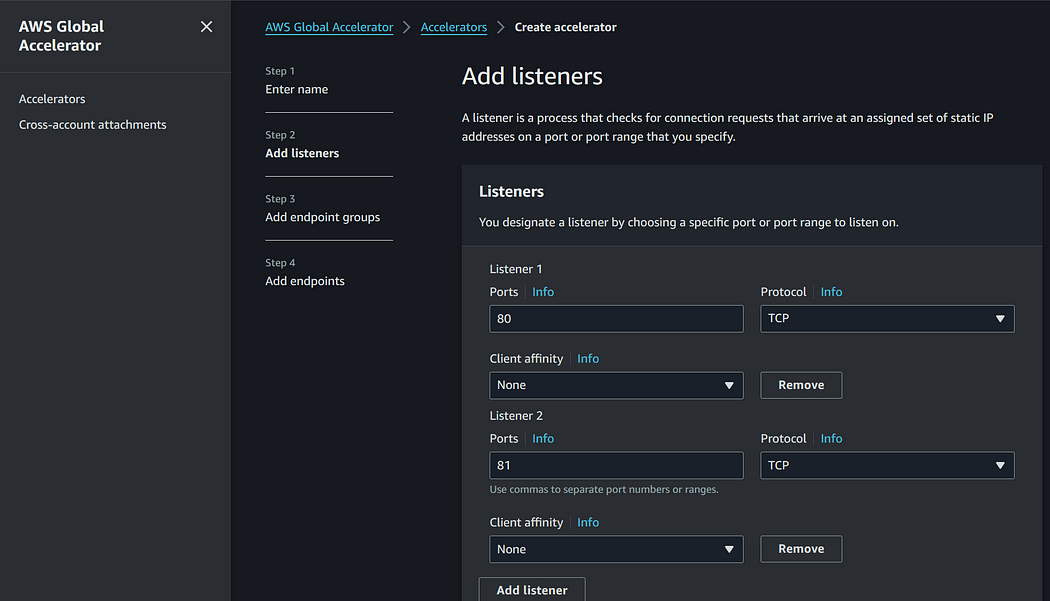

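- Create an accelerator in the AWS console, and add a listener that defines the ports and protocol (TCP or UDP) it accepts. Global Accelerator provisions two static IP addresses for the accelerator.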

- Configure endpoint groups. Choose one or more regional endpoint groups to associate with your accelerator’s listener by specifying the AWS Regions to which you want to distribute traffic. Your listener routes requests to the endpoints registered in these endpoint groups. You can set a traffic dial percentage for each endpoint group, which controls how much of the traffic headed to that group it actually accepts.

- Register endpoints in each endpoint group. Add regional resources (Application Load Balancers, Network Load Balancers, EC2 instances, or Elastic IP addresses) to each group. You can also give each endpoint a weight to control how much of the group’s traffic it receives.
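The same three steps can also be scripted. Below is a minimal sketch that assumes the AWS SDK for Python (boto3) and its `globalaccelerator` client. The function name `create_standard_accelerator`, the port 443/TCP listener, and the single endpoint group are illustrative choices, not requirements. The client is passed in as a parameter so the flow can be tried against a stub before touching a real account.

```python
def create_standard_accelerator(client, name, region, endpoint_ids, port=443):
    """Hypothetical helper: accelerator -> TCP listener -> one weighted endpoint group.

    `client` is assumed to be boto3.client("globalaccelerator", region_name="us-west-2").
    """
    # Step 1: the accelerator itself; AWS assigns its static IP addresses.
    accelerator = client.create_accelerator(
        Name=name, IpAddressType="IPV4", Enabled=True
    )["Accelerator"]

    # The listener defines which ports and protocol (TCP or UDP) are accepted.
    listener = client.create_listener(
        AcceleratorArn=accelerator["AcceleratorArn"],
        PortRanges=[{"FromPort": port, "ToPort": port}],
        Protocol="TCP",
        ClientAffinity="NONE",
    )["Listener"]

    # Steps 2 and 3: one endpoint group in `region`, with a traffic dial
    # and weighted endpoints (weights range from 0 to 255; 128 is the default).
    group = client.create_endpoint_group(
        ListenerArn=listener["ListenerArn"],
        EndpointGroupRegion=region,
        TrafficDialPercentage=100.0,
        EndpointConfigurations=[
            {"EndpointId": endpoint_id, "Weight": 128} for endpoint_id in endpoint_ids
        ],
    )["EndpointGroup"]

    # Collect the static IPs from the accelerator's IP sets.
    static_ips = [
        ip for ip_set in accelerator.get("IpSets", []) for ip in ip_set["IpAddresses"]
    ]
    return {
        "accelerator_arn": accelerator["AcceleratorArn"],
        "listener_arn": listener["ListenerArn"],
        "endpoint_group_arn": group["EndpointGroupArn"],
        "static_ips": static_ips,
    }
```

With real credentials, you would pass `boto3.client("globalaccelerator", region_name="us-west-2")` as `client`. The Global Accelerator API is served from the US West (Oregon) Region even when your endpoints live in other Regions.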

Key Features of AWS Global Accelerator:

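- Static IP Addresses: No more juggling a growing list of regional addresses. Global Accelerator gives your app two static IP addresses that act as a fixed entry point: a permanent, recognizable stage presence that doesn’t change when you add or replace the endpoints behind it.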

- Global Network Routing: Think of it as a teleport for your data! User traffic enters the AWS global network at the nearest edge location and then travels over the AWS backbone, instead of the congested public internet, to your application, which typically lowers latency.

- Instant Failover: Is one of your application’s servers having an off night? No worries! Global Accelerator continuously health-checks your endpoints and redirects traffic to healthy ones, in the same Region or another Region, keeping the show running smoothly.

- Traffic Dial: Need to control the flow of users for A/B testing or a new feature rollout? Global Accelerator’s handy traffic dial lets you adjust the audience size for a specific region, like dimming the lights before a special announcement!

- Weighted Traffic Distribution: Have multiple versions of your application behind different endpoints in the same Region? Global Accelerator acts like a spotlight operator, directing the right share of users to each endpoint based on the weights you assign.
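The traffic dial and weights boil down to simple proportions. The sketch below is a simplified Python model built on two assumptions. First, each healthy endpoint receives its weight divided by the sum of the healthy endpoints’ weights. Second, the dial keeps that percentage of the traffic headed to a group and sends the rest to other healthy groups. `Endpoint`, `endpoint_shares`, and `apply_traffic_dial` are hypothetical helpers, not part of any AWS SDK.

```python
from dataclasses import dataclass


@dataclass
class Endpoint:
    endpoint_id: str
    weight: int = 128     # Global Accelerator endpoint weights range from 0 to 255
    healthy: bool = True  # result of a (simulated) health check


def endpoint_shares(endpoints):
    """Fraction of an endpoint group's traffic each endpoint receives (simplified).

    Healthy endpoints get weight / sum(healthy weights); unhealthy endpoints
    and endpoints with weight 0 receive nothing.
    """
    active = [e for e in endpoints if e.healthy and e.weight > 0]
    total = sum(e.weight for e in active)
    return {e.endpoint_id: e.weight / total for e in active}


def apply_traffic_dial(dial_percent):
    """Split traffic bound for a group into (kept by the group, sent to other groups)."""
    if not 0 <= dial_percent <= 100:
        raise ValueError("traffic dial must be between 0 and 100")
    kept = dial_percent / 100
    return kept, 1 - kept
```

For a blue/green rollout, weights of 192 and 64 send 75% and 25% of the group’s traffic to the blue and green endpoints. If blue fails its health checks, green takes everything. A dial of 25 keeps a quarter of a Region’s traffic there and shifts the rest elsewhere.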

Perfect, but wait: isn’t this very close to Amazon CloudFront? I had the same feeling, and it’s a great question to ask.

Indeed, AWS Global Accelerator and Amazon CloudFront are quite similar, and both use AWS edge locations. But here are a few differences that will help you decide which one to use:

- Amazon CloudFront is a content delivery network (CDN) that improves performance for both cacheable content (such as images and videos) and dynamic content (such as API acceleration and dynamic site delivery). CloudFront works with HTTP and HTTPS traffic. It provides Lambda@Edge and CloudFront Functions, which intercept requests and responses at the edge and run short pieces of code.

- AWS Global Accelerator is a networking service that is a good fit for non-HTTP use cases, such as gaming (UDP), IoT (MQTT), or Voice over IP. It’s also a good fit for HTTP use cases that require static IP addresses or deterministic, fast regional failover. Global Accelerator doesn’t provide any caching.
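The comparison boils down to a few yes/no questions. As an illustration only, here is a rule-of-thumb helper in Python that encodes the two bullets above. `suggest_edge_service` is a hypothetical function, not an AWS tool. Real decisions also weigh cost, security, and your existing architecture, and when requirements conflict (for example, caching plus static IPs), this helper simply favors CloudFront.

```python
def suggest_edge_service(app_protocol, needs_caching=False, needs_edge_code=False,
                         needs_static_ips=False, needs_fast_regional_failover=False):
    """Rule-of-thumb choice between CloudFront and Global Accelerator.

    app_protocol is the application protocol, e.g. "HTTPS", "UDP", "MQTT".
    """
    is_http = app_protocol.upper() in {"HTTP", "HTTPS"}
    if needs_caching or needs_edge_code:
        # Caching, Lambda@Edge and CloudFront Functions exist only in CloudFront,
        # which serves HTTP(S) traffic.
        if not is_http:
            raise ValueError("caching and edge code require HTTP(S) through CloudFront")
        return "CloudFront"
    if not is_http or needs_static_ips or needs_fast_regional_failover:
        # Non-HTTP traffic (gaming over UDP, IoT over MQTT, VoIP), fixed IPs,
        # or deterministic regional failover.
        return "Global Accelerator"
    return "CloudFront"
```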

AWS Certification Champs, Take Note!

- Global Accelerator works with TCP and UDP traffic. It provides neither caching nor Lambda functions at the edge.

- Global Accelerator removes the hassle of managing a growing number of IP addresses and the related security rules: clients and firewall allow lists only need the accelerator’s static IP addresses.

- With Global Accelerator, you can add or remove endpoints in AWS Regions, run blue/green deployments, and do A/B testing without having to update the IP addresses in your client applications.

- Global Accelerator improves performance for latency-sensitive applications.

- With a custom routing accelerator, you can deterministically route multiple users to a specific EC2 instance IP address and port behind the accelerator, in one or more AWS Regions. One example is a multiplayer game that assigns several players to the same game session. Another is a VoIP or social media app that assigns multiple users to a specific media server to start a voice or video call.

- Global Accelerator creates a peering connection with your Amazon Virtual Private Cloud using private IP addresses, keeping connections to your internal Application Load Balancers or private EC2 instances off the public internet.

- Global Accelerator improves performance for VoIP, online gaming, and IoT sensor applications.

- You can’t directly configure on-premises resources as endpoints for your static IP addresses, but you can configure a Network Load Balancer (NLB) in each AWS Region to address your on-premises endpoints. Then you can register the NLBs as endpoints in your AWS Global Accelerator configuration.
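A custom routing accelerator is set up with its own family of API calls. The following minimal sketch assumes the boto3 `globalaccelerator` client and walks through a UDP game-server scenario. The function name `create_custom_routing_setup`, the port ranges, and the choice to allow all traffic in the subnet are illustrative assumptions. The listener port range must be large enough to cover every destination IP and port, and AWS validates this. After setup, `list_custom_routing_port_mappings` tells you which accelerator port maps to which instance IP and port.

```python
def create_custom_routing_setup(client, name, region, subnet_id,
                                listener_ports=(10000, 20000),
                                destination_ports=(5000, 5010)):
    """Hypothetical helper: custom routing accelerator for UDP servers in one subnet.

    `client` is assumed to be boto3.client("globalaccelerator", region_name="us-west-2").
    """
    accelerator = client.create_custom_routing_accelerator(
        Name=name, IpAddressType="IPV4", Enabled=True
    )["Accelerator"]

    # Listener ports are what clients connect to; each maps to one destination IP:port.
    listener = client.create_custom_routing_listener(
        AcceleratorArn=accelerator["AcceleratorArn"],
        PortRanges=[{"FromPort": listener_ports[0], "ToPort": listener_ports[1]}],
    )["Listener"]

    # Ports the game or media servers listen on inside the VPC, here over UDP.
    group = client.create_custom_routing_endpoint_group(
        ListenerArn=listener["ListenerArn"],
        EndpointGroupRegion=region,
        DestinationConfigurations=[{
            "FromPort": destination_ports[0],
            "ToPort": destination_ports[1],
            "Protocols": ["UDP"],
        }],
    )["EndpointGroup"]

    # Custom routing endpoints are VPC subnets rather than individual instances.
    client.add_custom_routing_endpoints(
        EndpointConfigurations=[{"EndpointId": subnet_id}],
        EndpointGroupArn=group["EndpointGroupArn"],
    )
    # Destinations are not allowed to receive traffic by default; open the whole subnet.
    client.allow_custom_routing_traffic(
        EndpointGroupArn=group["EndpointGroupArn"],
        EndpointId=subnet_id,
        AllowAllTrafficToEndpoint=True,
    )
    return group["EndpointGroupArn"]
```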

P.S. That’s all for today! Don’t forget to share your thoughts and experience with AWS Global Accelerator in the comments below!