Pretrained Large Language Models (LLMs) are increasingly widely used and are known for their impressive performance. While LLMs are continuously improving, they are still limited in specific domains like mathematics and medicine. A good deal of research has been dedicated to equipping LLMs with external tools and techniques that provide more flexibility and enhance their capabilities.

In this post, I’m excited to present Generated Knowledge Prompting (GKP) and Knowledge-Based Tuning. Although they are distinct in their approaches, these methods share the common goal of refining model performance and expanding its comprehension across various domains.

Generated Knowledge Prompting (GKP)

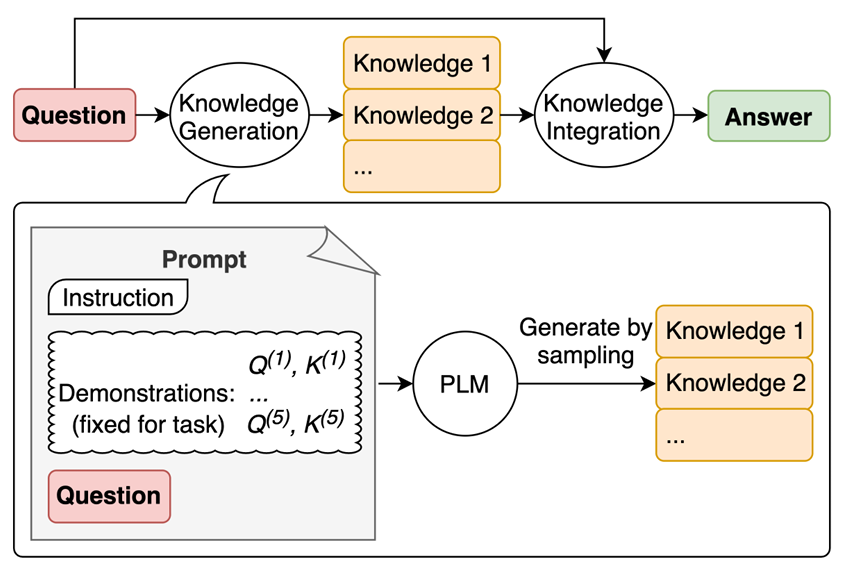

GKP is a prompting technique proposed by Liu et al. The key insight behind Generated Knowledge Prompting is to first generate useful knowledge from another language model or an external source, then provide that knowledge in the input prompt, concatenated with the question.

The intention behind this technique is to steer the model towards specific types of outputs. By crafting prompts that carry knowledge about particular domains or concepts, GKP guides the model to understand the question and generate relevant information.

Consider a scenario where a language model needs to answer questions involving medical terminology and concepts. With Generated Knowledge Prompting, the first step is to generate the relevant medical knowledge. The second is to include that knowledge in the prompt alongside the question. The model then analyzes the prompt and uses the supplied medical knowledge to generate text that addresses the query or provides information on the specified topic. This method harnesses the power of structured prompts to shape the model’s understanding and output.
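To make the two steps concrete, here is a minimal sketch in Python. It is only an illustration under a few assumptions: `LanguageModel` is a hypothetical type for any function that takes a prompt and returns text, `stub_model` is a stand-in for a real LLM call, and the prompt wording is my own, not the template from the paper. For simplicity it concatenates all generated knowledge into a single prompt.

```python
from typing import Callable, List

# Hypothetical type: any function that maps a prompt string to generated text.
LanguageModel = Callable[[str], str]


def generate_knowledge(model: LanguageModel, question: str,
                       num_statements: int = 1) -> List[str]:
    """Step 1: ask a model for short statements relevant to the question."""
    prompt = (
        "Generate a short factual statement that helps answer the question.\n"
        f"Question: {question}\n"
        "Knowledge:"
    )
    return [model(prompt).strip() for _ in range(num_statements)]


def build_answer_prompt(question: str, knowledge: List[str]) -> str:
    """Step 2: concatenate the generated knowledge with the question."""
    knowledge_block = "\n".join(f"- {fact}" for fact in knowledge)
    return f"Knowledge:\n{knowledge_block}\n\nQuestion: {question}\nAnswer:"


def answer_with_gkp(knowledge_model: LanguageModel,
                    answer_model: LanguageModel,
                    question: str) -> str:
    """Run both steps: generate knowledge, then answer with it in the prompt."""
    knowledge = generate_knowledge(knowledge_model, question)
    return answer_model(build_answer_prompt(question, knowledge))


def stub_model(prompt: str) -> str:
    """Stand-in for a real LLM call, so the sketch runs without any API."""
    if prompt.rstrip().endswith("Knowledge:"):
        return "Hypertension is the medical term for high blood pressure."
    return f"(answer produced from a prompt of {len(prompt)} characters)"


# Show the prompt that the answering model would receive.
question = "What is hypertension?"
print(build_answer_prompt(question, generate_knowledge(stub_model, question)))
```

In a real setup you would replace `stub_model` with calls to the models of your choice. The knowledge model and the answering model can be the same model or two different ones.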

Knowledge-Based Tuning

On the other hand, knowledge-based tuning involves fine-tuning a pre-trained model by integrating external data or information from knowledge sources. This process seeks to augment the model’s performance on specific tasks or domains by enriching its understanding with additional knowledge.

Let’s take legal texts as an example. If a language model requires proficiency in comprehending legal language, knowledge-based tuning can be employed. By fine-tuning the model on a dataset of legal documents, the model can assimilate legal terminology and concepts, thereby enhancing its ability to interpret and generate responses in legal contexts.
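The fine-tuning run itself depends on the framework you use, so I won't show it here. What most setups have in common is a data preparation step, and that part can be sketched in a few lines of Python. Everything below is an assumption for illustration: the `title`/`text` input fields, the `source`/`text` record layout, the word-based chunk size, and the sample clause, which is made up. Check the format your fine-tuning tool actually expects.

```python
import json
from typing import Dict, Iterable, List


def chunk_text(text: str, max_words: int = 50) -> List[str]:
    """Split a document into consecutive chunks of at most max_words words."""
    words = text.split()
    return [" ".join(words[i:i + max_words])
            for i in range(0, len(words), max_words)]


def build_training_records(documents: Iterable[Dict[str, str]],
                           max_words: int = 50) -> List[Dict[str, str]]:
    """Turn each document into one training record per chunk."""
    records = []
    for doc in documents:
        for chunk in chunk_text(doc["text"], max_words):
            # Hypothetical record layout; adapt it to your fine-tuning tool.
            records.append({"source": doc["title"], "text": chunk})
    return records


def to_jsonl(records: List[Dict[str, str]]) -> str:
    """Serialize records as JSON Lines, one JSON object per line."""
    return "\n".join(json.dumps(r, ensure_ascii=False) for r in records)


# Made-up sample document, only to show the shape of the output.
legal_documents = [
    {
        "title": "Sample lease clause",
        "text": "The lessee shall pay rent to the lessor on the first day of each month.",
    },
]

print(to_jsonl(build_training_records(legal_documents, max_words=8)))
```

The resulting JSONL text is what you would then hand to the training step, which is where the model's weights actually change.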

Difference Between the Two Techniques

While both Generated Knowledge Prompting (GKP) and Knowledge-Based Tuning contribute to enhancing language models, they differ in their methodologies and objectives. GKP guides the model through tailored prompts: knowledge is provided to the LLM as an input, and the model itself is unchanged. Knowledge-based tuning, by contrast, modifies the model or its training process to incorporate external knowledge directly, which results in a fine-tuned model.

In summary, Generated Knowledge Prompting and knowledge-based tuning represent complementary approaches to empowering language models with deeper comprehension and proficiency across diverse subject matters.

That’s all for today. I will share more about LLMs, so don’t miss the coming posts 🎉. Follow along and join the conversation by sharing your experiences and suggestions in the comments below 🌸
